QTAC–FAQ chatbot & voice experience

Conversation design of a chatbot and smart speaker voice apps for the Queensland Tertiary Admissions Centre (QTAC)

My role: Conversation Designer & Project Manager (client project)

Credits: TalkVia tech team

Platform: Chatbot, Amazon Alexa & Google Assistant

Overview

QTAC is an Australian non-profit organisation providing tertiary entry and application services for higher education providers in Queensland and Northern New South Wales. From December 2020, QTAC became responsible for calculating the Australian Tertiary Admission Ranks (ATARs) for high-school graduates in Queensland.

In anticipation of the high number of ATAR enquiries, TalkVia worked with QTAC to launch a website chatbot and smart speaker apps that could answer student queries and help to ease the pressure on their contact centre team.

NOTE: Many images are low-res or blurred and cannot be expanded for confidentiality purposes.

Please contact me if you would like further information.

BACKGROUND

The QTAC project had two phases:

Phase 1 was the initial five-week design and build of the FAQ chatbot and voicebots which comprised approximately 50 FAQs.

Phase 2 was the subsequent bot review and enhancement which began six months after initial launch, adding a further 70 FAQs.

Core activities and learnings for each phase of the project are summarised below.

PHASE 1: PROJECT PLANNING & RESEARCH

I project managed and led the conversation design for Phase 1 of the QTAC project during my first six weeks with TalkVia. QTAC was a new client and this was also TalkVia’s first chatbot build, alongside the Alexa Skill and Google Action.

To add to the challenge, the project had been sold with an incredibly short timeframe and a fixed deadline, and as a new employee I was still learning the full capability of the TalkVia platform.

From a project management perspective, I broke the available time into smaller milestones, scheduled regular client check-ins and flagged internally that I would need dedicated tech resources. I worked with our Business Development team to gather the client requirements and the additional information needed to inform the design.

The client provided user personas and commonly asked questions from their website. Although this type of content isn't ideal for translation to a voice app, I thoroughly reviewed it and was able to condense it down into a more conversational format. I referred to their existing website and fact sheet to ensure consistency of tone, and I grouped the FAQs into core categories to better understand how some questions could relate to others.

PHASE 1: IDEATION

Throughout this project, I was also standardising design assets and processes for the TalkVia team.

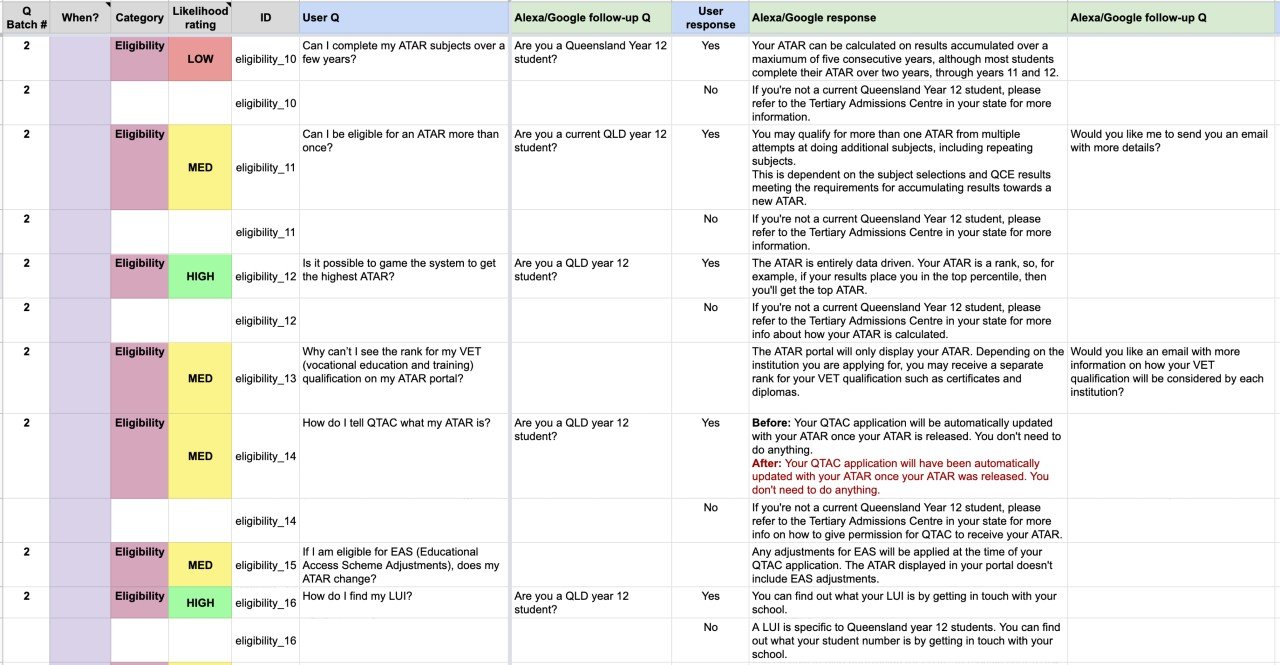

I created a spreadsheet template for the detailed conversation design into which I imported the client’s FAQs. This enabled me to clearly lay out prompt and suggestion chip copy, follow-up questions, and links to other FAQs.

The bots were to be built on our "TalkVia One" SaaS product which enables deployment to multiple platforms.

However, the platform offered limited functionality (compared to our "Pro" platform), so I had to carefully consider the intents and content to be included, and how best to ensure the interactions would be conversational despite the limitations. My main concern was to avoid creating any loops for the user.
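One way to check a design for loops is to treat the "links to other FAQs" column of the design spreadsheet as a directed graph and search it for cycles. The sketch below is illustrative only: the FAQ IDs and the `links` mapping are hypothetical, and this is not how the TalkVia platform itself works.

```python
def find_loop(links):
    """Return one loop as a list of FAQ IDs (first ID repeated at the end), or None."""
    UNSEEN, IN_PROGRESS, DONE = 0, 1, 2
    state = {}
    path = []

    def visit(faq):
        state[faq] = IN_PROGRESS
        path.append(faq)
        for nxt in links.get(faq, []):
            status = state.get(nxt, UNSEEN)
            if status == IN_PROGRESS:
                # We have come back to an FAQ that is still on the current path.
                return path[path.index(nxt):] + [nxt]
            if status == UNSEEN:
                loop = visit(nxt)
                if loop:
                    return loop
        path.pop()
        state[faq] = DONE
        return None

    for faq in links:
        if state.get(faq, UNSEEN) == UNSEEN:
            loop = visit(faq)
            if loop:
                return loop
    return None


# Hypothetical follow-up links between FAQs.
links = {
    "atar_release_date": ["atar_access"],
    "atar_access": ["atar_portal_login"],
    "atar_portal_login": ["atar_access"],
}
print(find_loop(links))  # ['atar_access', 'atar_portal_login', 'atar_access']
```

Running a check like this whenever the spreadsheet changes would flag a loop before it reaches QA.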

Key learning

I walked the client through the initial draft design to get early feedback. Many of the original FAQs had a follow-up question which I had removed to reduce unnecessary turns for the user and simplify the interactions.

Both the client and a colleague questioned this decision and I initially stood my ground – explaining my reasoning and conversation design best practices. However, as my colleague had an established relationship with the client and I was new to the company and project, I ultimately conceded and these questions were added back into the design.

As the conversation design specialist, I should have trusted my expertise and experience. It was later agreed that these questions were indeed unnecessary and affected the user experience, and we were able to address this in Phase 2 of the project.

PHASE 1: DESIGN & DEVELOPMENT

I always engage with the dev team early in a design phase to ensure that the vision is technically feasible. This was especially important as the content equated to 44 unique intents, which would be TalkVia's largest app so far. We collaborated closely on the creation of training phrases, NLU training and handling of recognition conflicts.

I had also asked the client to provide a priority for each FAQ, which helped us prioritise the key user intents.

The content was designed from a Voice First perspective, so I was careful to review and update the content for the chatbot. I split the copy into readable chunks, added clear suggestion chips, and also ensured there was SSML for the many acronyms, email addresses, and website URLs.
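As a rough illustration of the SSML step, the sketch below wraps selected acronyms in `<say-as interpret-as="characters">` so that a speech engine reads them letter by letter. The `SPELL_OUT` set and the sample sentence are assumptions for the example, not the project's actual content, and which acronyms should be spelled out is a design decision for each one.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical set of acronyms that should be read letter by letter.
SPELL_OUT = {"NSW"}


def to_ssml(text, spell_out=SPELL_OUT):
    """Escape the text, wrap listed acronyms in <say-as>, and return a <speak> document."""
    def wrap(match):
        word = match.group(0)
        if word in spell_out:
            return f'<say-as interpret-as="characters">{word}</say-as>'
        return word  # Leave other capitalised words to the default pronunciation.

    body = re.sub(r"\b[A-Z]{2,}\b", wrap, escape(text))
    return f"<speak>{body}</speak>"


print(to_ssml("QTAC covers Queensland and northern NSW"))
# <speak>QTAC covers Queensland and northern <say-as interpret-as="characters">NSW</say-as></speak>
```

Email addresses and URLs usually need their own handling, for example spelling out only the unusual parts, which this sketch does not attempt.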

Throughout the project, I ensured that all changes were reflected in the design spreadsheet so that it remained the source of truth. This made it much easier to review and update later on, and as a reference during QA.

PHASE 1: TESTING & VALIDATION

I carried out exhaustive QA alongside our QA engineer, as I knew that I would approach testing from a different perspective. We found that with so many similar intents, a delicate balance of NLU tuning was required to ensure recognition of all user FAQs.
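A simple way to spot likely recognition conflicts before testing is to compare training phrases across intents and flag pairs that share most of their words. The sketch below uses Jaccard similarity on word sets. The intent names, phrases and the 0.6 threshold are hypothetical, and a real NLU engine weighs phrases quite differently, so treat it as a first-pass screen rather than a predictor.

```python
from itertools import combinations


def jaccard(phrase_a, phrase_b):
    """Share of distinct words the two phrases have in common (0.0 to 1.0)."""
    words_a = set(phrase_a.lower().split())
    words_b = set(phrase_b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def confusable_pairs(intents, threshold=0.6):
    """List (intent_a, intent_b, phrase_a, phrase_b, score) for phrases at or above threshold."""
    hits = []
    for (name_a, phrases_a), (name_b, phrases_b) in combinations(intents.items(), 2):
        for phrase_a in phrases_a:
            for phrase_b in phrases_b:
                score = jaccard(phrase_a, phrase_b)
                if score >= threshold:
                    hits.append((name_a, name_b, phrase_a, phrase_b, round(score, 2)))
    return hits


# Hypothetical intents and training phrases.
intents = {
    "course_application": ["how do i apply for a course"],
    "scholarship_application": ["how do i apply for a scholarship"],
    "atar_release": ["when are atars released"],
}
for hit in confusable_pairs(intents):
    print(hit)
# ('course_application', 'scholarship_application', 'how do i apply for a course', 'how do i apply for a scholarship', 0.75)
```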

Following our internal QA, the client completed their own UAT before the project was signed off for go-live.

Key learning

The client asked to have 12 people on the UAT test panel, which made it logistically challenging to communicate test instructions and provide access to the beta apps. The large number of testers also led to a lack of ownership, so very few people actually carried out any testing.

For Phase 2, I limited the UAT panel to a maximum of 5-6 people, and we subsequently received far more feedback from this group.

PHASE 1: CONCLUSION

The QTAC website chatbot and Google Action were designed, developed, QA'd and approved for certification within 4 weeks of project kick-off, and the Amazon Alexa skill went live shortly afterwards.

The QTAC Marketing team jumped on board with social posts and links from their homepage.

On completion of the project, I wrote detailed notes in a retro-style format to capture learnings for future projects.

QTAC engaged us for Phase 2 of the voice and chatbot project a few months later.

PHASE 2: PROJECT PLANNING

Phase 2 of the project began six months after initial launch. The original conversational experience was to be extended with additional FAQs to support a wider range of user requirements.

During the first phase of the project, I encountered challenges with stakeholder engagement and communications. I had several stakeholder contacts, but when I emailed the group, no one took ownership of replying.

I applied these learnings to my approach for Phase 2 by scheduling weekly check-ins and creating Slack channels for smoother comms.

I also provided more transparency of the project progress with a working timeline, Trello board and shared Google Drive.

PHASE 2: DESIGN & DEVELOPMENT

The client wanted to add significantly more content to the FAQ chatbot and voicebots. This wasn't ideal as the NLU was already somewhat fragile, and we'd had issues with confusability of similar FAQs during Phase 1. Unfortunately neither I nor the tech team had been engaged for input during the sales process so we had to do the best we could.

I first cross-checked the new FAQs against the original content to identify any duplication. I rephrased most of the content, removing unnecessary clarifications and adding relevant follow-up questions or suggesting email content to the user.

To add complexity to the build, we had to call out to our "Pro" platform to handle some intents where there was particular confusability. Some intents required disambiguation to determine which FAQ the user should hear, for example an application for a course vs. a scholarship, or the email address for the website vs. the ATAR portal.
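The disambiguation pattern can be sketched as a lookup from an ambiguous request to the FAQs it might mean, plus a clarifying prompt. Everything in the example is hypothetical (the `AMBIGUOUS` table, the FAQ IDs and the prompt wording), and it does not reflect how the "Pro" platform implements this.

```python
# Hypothetical mapping from an ambiguous topic to the FAQs it could refer to.
AMBIGUOUS = {
    "apply": {
        "course": "faq_course_application",
        "scholarship": "faq_scholarship_application",
    },
    "email": {
        "website": "faq_website_email",
        "ATAR portal": "faq_atar_portal_email",
    },
}


def disambiguation_prompt(topic):
    """Build a short clarifying question listing the available options."""
    options = list(AMBIGUOUS[topic])
    return f"Is that for the {' or the '.join(options)}?"


def resolve(topic, reply):
    """Return the FAQ ID whose option appears in the user's reply, or None."""
    reply = reply.lower()
    for option, faq_id in AMBIGUOUS[topic].items():
        if option.lower() in reply:
            return faq_id
    return None  # Caller should re-prompt or fall back.


print(disambiguation_prompt("apply"))             # Is that for the course or the scholarship?
print(resolve("email", "the ATAR portal please")) # faq_atar_portal_email
```

Returning `None` for an unrecognised reply keeps the fallback decision with the caller, which helps avoid trapping the user in a repeated prompt.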

Furthermore, Amazon Alexa has a limit of approximately 50 one-shot phrases, so we had to prioritise which intents could be invoked via one-shot (as we ultimately had close to 120 intents).
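If each intent carries a client-assigned priority, choosing the one-shot set can be as simple as ranking and cutting off at the limit. This is a minimal sketch: the priority scale (1 = most important), the intent names and the default limit of 50 are assumptions based on the description above.

```python
def pick_one_shot_intents(priorities, limit=50):
    """Return up to `limit` intent names, most important first.

    `priorities` maps intent name -> priority, where 1 is the most important.
    Ties are broken alphabetically so the result is stable between runs.
    """
    ranked = sorted(priorities, key=lambda name: (priorities[name], name))
    return ranked[:limit]


# Hypothetical priorities for three intents, with a small limit for the demo.
priorities = {"fee_refund": 3, "atar_release": 1, "contact": 2}
print(pick_one_shot_intents(priorities, limit=2))  # ['atar_release', 'contact']
```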

I also reviewed the analytics data from the initial launch and we used these findings to make additional improvements. I added several floating intents to handle requests such as "are you a bot", "speak to a human/real person" and "how do I contact you", which weren’t catered for initially.

Throughout the content review and design I engaged with the TalkVia tech team for feedback and held client walkthroughs. I also worked closely with an agent from the client's contact centre team who provided me with expert guidance on content and potential training phrases.

PHASE 2: TESTING & VALIDATION

The core build work took only 2-3 weeks, so we were able to commence our internal QA from week 6: first on the chatbot, then the Google Action, and finally on the Amazon Alexa skill (which happened to be where we had the most recognition issues).

The QA effort was significant, taking close to 2.5 weeks, before we could hand over to the client for their extensive UAT. The client UAT process also ran significantly longer than expected due to resourcing and conflicting client responsibilities, but the updated apps were finally submitted to and approved for certification on both the Amazon Alexa and Actions on Google platforms.

PHASE 2: CONCLUSION

As I was writing up this case study, QTAC again engaged the TalkVia team for further enhancements to their chatbot and voice apps, with a view to a potentially much larger scale project with their contact centre and IVR connectivity.